2023-10-03 00:18:22

In the last 24 months, the way I write code has changed big time.

First, I've been using Copilot for almost two years now. I don't think about it anymore. It's already part of how I write code. It works.

How much faster am I because of Copilot?

That's a tricky question, but I estimate between 20% and 40% faster.

Copilot, however, is the tip of the iceberg.

I don't remember the last time I went to Stack Overflow. ChatGPT took that place in my workflow. Zero negativity, and it gives me precise answers about my codebase.

Here is everything I do with ChatGPT:

• It gives me ideas about which unit tests to write

• It helps me find dumb bugs I missed

• It explains code I don't understand

• It helps me write code in unfamiliar languages

• It writes about 80% of the documentation I need

• It gives me ideas on how to improve my code

• It mentions corner cases I should cover

• It answers my "How do I do this?" questions

I don't have access to the multi-modal version yet. I've seen some impressive demos where ChatGPT generates an application from a diagram. I can't wait to try it.

I've reviewed dozens of tools over the past year. Most build on top of Large Language Models to help developers. Here are some of the most notable areas these tools tackle:

• Smart assistants that integrate with notebooks and your IDE. Some of these go beyond what Copilot does. There's even a fork of a popular IDE that promises an AI-first experience.

• Assistants integrated with database servers. You can use them to build Machine Learning models without leaving your database.

• Assistants that help with code reviews. They offer suggestions, look for security vulnerabilities, and help with documentation.

None of these was possible two years ago.

I wrote my first line of code in 2012. I don't remember another period when the way I write code improved this much.

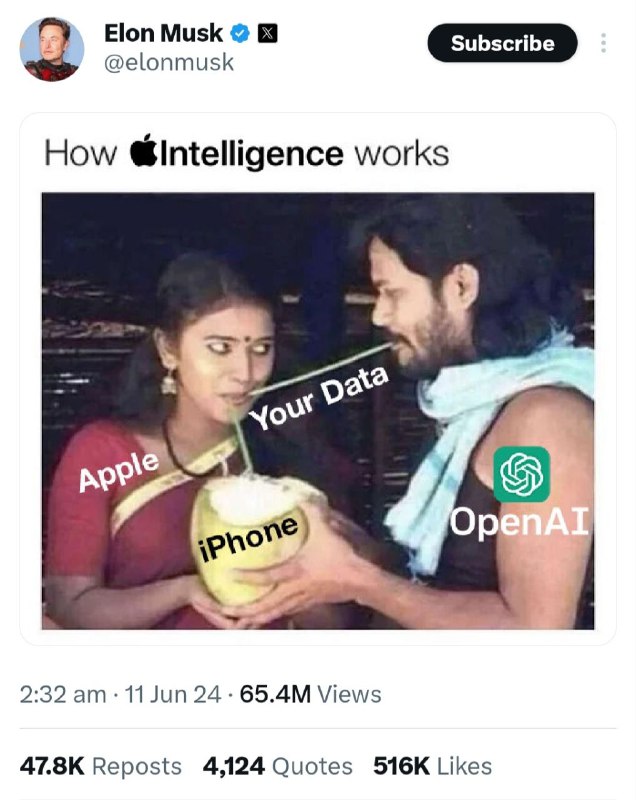

These tools aren't perfect. They automate many tasks but are far from replacing me as a professional. Artificial Intelligence is not better than I am, but it gives me superpowers.

Remember that AI will not replace you. A person using AI will.
